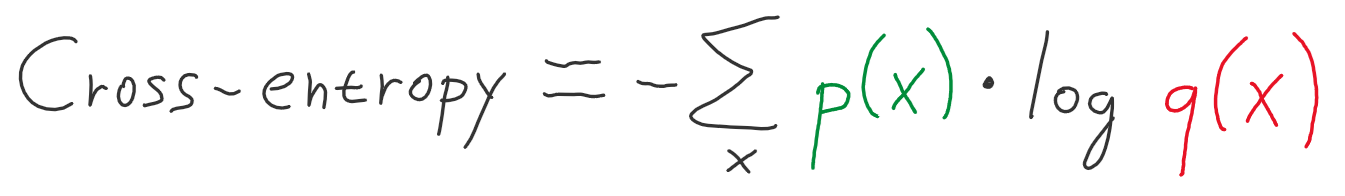

Modern deep learning is primarily an experimental science, in which empirical advances occasionally come at the expense of probabilistic rigor. Here we focus on one such example, namely the use of the categorical cross-entropy loss to model data that is not strictly categorical but instead takes values on the simplex. This practice is standard in neural network architectures with label smoothing and actor-mimic reinforcement learning, amongst others. Drawing on the recently discovered continuous-categorical distribution, we propose probabilistically inspired alternatives to these models, providing an approach that is more principled and theoretically appealing.

The graph here shows categorical cross-entropy (CCE) plotted on the x and y axes, where green is the target value, orange is the predicted value, red is the output of CCE, and blue is …

They use the following to add a 'second mask' (containing the weights for each class of the mask image) to the dataset: `def add_sample_weights(image, label):`, whose comment reads "The weights for each class, with the constraint that: `sum(class_weights) == 1.0`", followed by `class_weights = tf.`…
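The snippet stops before the class weights are defined. The sketch below shows the same idea in dependency-free Python rather than TensorFlow: normalize per-class weights so they sum to 1.0, then build a per-pixel "second mask" by using each pixel's integer class id as an index into those weights. The weight values in `RAW_CLASS_WEIGHTS` are hypothetical placeholders, and so is the helper `weighted_sparse_cce`. Averaging over the pixel count is also an assumption. In TensorFlow the per-pixel lookup would typically be a `tf.gather` on the label tensor, with the function mapped over a `tf.data.Dataset` so that `Model.fit` receives `(image, label, sample_weight)` triples.

```python
import math

# Hypothetical raw per-class weights for a 3-class segmentation mask.
# The original snippet's values are not shown, so these are placeholders.
RAW_CLASS_WEIGHTS = [1.0, 3.0, 6.0]


def add_sample_weights(image, label):
    """Return (image, label, sample_weights), where sample_weights is a 'second mask'.

    `label` is a 2-D list of integer class ids. Each pixel's weight is looked up
    from the normalized class weights, using its class id as the index.
    """
    # Rescale so that sum(class_weights) == 1.0, as the original comment requires.
    total = sum(RAW_CLASS_WEIGHTS)
    class_weights = [w / total for w in RAW_CLASS_WEIGHTS]
    # Per-pixel lookup: the plain-Python counterpart of a gather on the label mask.
    sample_weights = [[class_weights[c] for c in row] for row in label]
    return image, label, sample_weights


def weighted_sparse_cce(label, probs, sample_weights):
    """Average over all pixels of weight * -log(predicted probability of the true class).

    `probs[r][c]` is the predicted class distribution at pixel (r, c).
    Dividing by the pixel count (not by the sum of weights) is an assumption.
    """
    losses = [
        -sample_weights[r][c] * math.log(probs[r][c][cls])
        for r, row in enumerate(label)
        for c, cls in enumerate(row)
    ]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    label = [[0, 1], [2, 2]]          # 2x2 mask of class ids
    image = [[0.2, 0.4], [0.6, 0.8]]  # placeholder pixel values
    _, _, weights = add_sample_weights(image, label)
    print(weights)
    uniform = [[[1 / 3] * 3 for _ in row] for row in label]  # uniform predictions
    print(weighted_sparse_cce(label, uniform, weights))
```

With these placeholder weights the mask `[[0, 1], [2, 2]]` gets the weights `[[0.1, 0.3], [0.6, 0.6]]`. Pixels of the up-weighted class therefore contribute proportionally more to the loss. The image itself passes through unchanged.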

I think this is the solution to weighting `sparse_categorical_crossentropy` in Keras.

This figure shows a generic classification network and how CCE is likely to be used.

Source: *Uses and Abuses of the Cross-Entropy Loss: Case Studies in Modern Deep Learning*, Proceedings on "I Can't Believe It's Not Better!" at NeurIPS Workshops, Proceedings of Machine Learning Research.
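The abstract's central point is that categorical cross-entropy is routinely applied to targets that lie on the simplex rather than being one-hot, with label smoothing as one case. A small pure-Python sketch can make this concrete. It assumes the usual definition CCE = -Σ_i y_i log softmax(η)_i. For contrast, it also computes a continuous-categorical negative log-likelihood, assuming a density proportional to exp(η·x) on the simplex. Under that assumption the normalizer is Z(η) = Σ_k exp(η_k) / Π_{i≠k}(η_k − η_i), which holds for distinct η_k. The function names are hypothetical, and this is not the paper's code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def smooth_labels(class_index, num_classes, epsilon):
    """Label smoothing: (1 - epsilon) * one_hot + epsilon / K, a point on the simplex."""
    return [(1.0 - epsilon) * (1.0 if i == class_index else 0.0) + epsilon / num_classes
            for i in range(num_classes)]


def categorical_cross_entropy(target, logits):
    """CCE = -sum_i y_i * log softmax(logits)_i; the target may be any point on the simplex."""
    probs = softmax(logits)
    return -sum(y * math.log(p) for y, p in zip(target, probs))


def cc_log_normalizer(eta):
    """log Z(eta), with Z(eta) = sum_k exp(eta_k) / prod_{i != k} (eta_k - eta_i).

    Z(eta) is the integral of exp(eta . x) over the simplex, taken with respect to
    Lebesgue measure on the first K - 1 coordinates. Assumes distinct eta_k; this
    direct formula loses precision when eta values are close together.
    """
    z = 0.0
    for k, eta_k in enumerate(eta):
        denom = 1.0
        for i, eta_i in enumerate(eta):
            if i != k:
                denom *= eta_k - eta_i
        z += math.exp(eta_k) / denom
    return math.log(z)


def cc_negative_log_likelihood(target, eta):
    """-log p(x | eta) for a continuous-categorical density proportional to exp(eta . x)."""
    return -sum(x * e for x, e in zip(target, eta)) + cc_log_normalizer(eta)


if __name__ == "__main__":
    y = smooth_labels(class_index=0, num_classes=3, epsilon=0.1)
    logits = [2.0, 0.5, -1.0]
    print("smoothed target:", y)
    print("CCE:", categorical_cross_entropy(y, logits))
    print("continuous-categorical NLL:", cc_negative_log_likelihood(y, logits))
```

Because a simplex-valued target sums to one, CCE reduces to −η·y + logsumexp(η). The two losses therefore differ only in their η-dependent normalizer. CCE uses logsumexp(η), which normalizes over the K vertices of the simplex. The continuous-categorical uses log Z(η), which normalizes over the whole simplex. Note also that for a smoothed target, CCE is minimized when the softmax output equals the target, but its minimum value is the entropy of the target rather than zero.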
